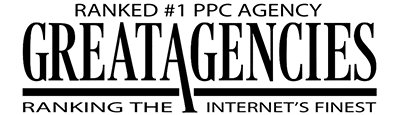

Anyone looking in Google Search Console this week has seen a concerning trendline: dramatically decreased impressions starting around September 10, as seen below. But wait, it’s not you, it’s Google. And it might be a better picture than you think.

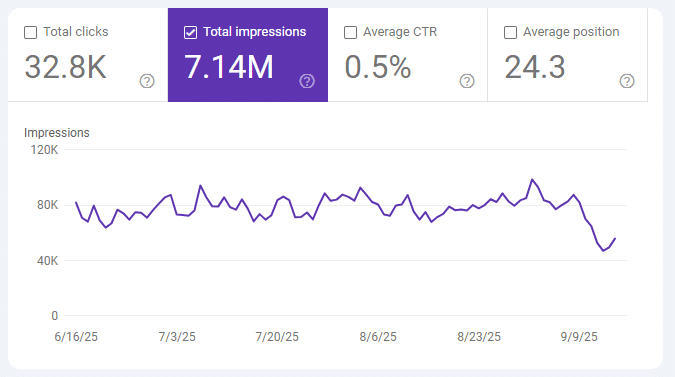

Take a closer look at the data. As in the image below, many sites saw a corresponding improvement in their average ranking position (a lower number) and no change in clicks.

That’s an odd pattern, because a decrease in impressions would traditionally mean a decrease in clicks as well. The shift in average ranking position is less odd, since that metric tends to move inversely to impressions. When impressions spike or drop sharply, the average ranking position typically shows the same trendline in reverse, peaking when impressions crater and vice versa.

So, assuming your clicks are stable, and for many sites they do appear to be despite the decrease in impressions, organic search performance is in less peril than it originally appears. As with any major change, though, it bears close scrutiny.

What’s Causing the Drop in Impressions?

There are three potential causes:

- Many sites across the internet actually simultaneously lost impressions during the same week;

- Google had a massive data issue in Search Console that they haven’t announced yet;

- The num=100 search operator was deprecated, uncovering the presence of bot data in Google Search Console’s impression data.

It’s possible that it’s the third option. But what’s num=100? Google has long allowed searchers to return 100 listings at a time in its search engine results pages (SERPs) by adding the num=100 parameter to the URL’s query string. As of September 10, that ability appears to have been removed, though Google has not yet confirmed the removal. What we do know is that the parameter hasn’t functioned this week.
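To make the mechanics concrete, here is a minimal sketch of what such a URL looks like. The helper name `build_search_url` and the sample query are hypothetical, and the snippet only builds a string; it does not send any request or imply that the parameter still works.

```python
from typing import Optional
from urllib.parse import urlencode


def build_search_url(query: str, num: Optional[int] = None) -> str:
    """Build a Google search URL, optionally adding the num parameter.

    Hypothetical helper for illustration only; it constructs the URL
    string and does not contact Google.
    """
    params = {"q": query}
    if num is not None:
        params["num"] = num
    return "https://www.google.com/search?" + urlencode(params)


# A standard results page versus the 100-results view described above.
default_url = build_search_url("running shoes")
hundred_url = build_search_url("running shoes", num=100)
print(default_url)
print(hundred_url)
```

The only difference between the two URLs is the trailing `num=100` pair in the query string, which is the piece that reportedly stopped being honored.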

So what? Well, ranking trackers like Semrush used num=100 to quickly pull data. So did tools like SerpApi, which AI tools like ChatGPT reportedly use to access Google’s search results.

The removal of num=100, and the corresponding drop in impressions, has called into question a number of assumptions about what the data represents and who’s really “viewing” those impressions. It’s possible that all of those bots accessing the data were inflating Google Search Console impression data.

We do not have proof that the drop in impressions in Google Search Console is due to a decrease in bot traffic. The timing lines up, and there is a lot of speculation that makes a certain amount of sense, but nothing has been confirmed.

The Likely Impact on Rankings Tools

Because keyword ranking tools relied on the 100-result view to scrape data, there may be disruptions in keyword reporting or rising costs for retrieving the data. Semrush, Ahrefs, and all of the others will now need 10 requests instead of one to scrape 100 results from Google, which means roughly 10 times the time, computing power, and cost while these platforms figure out alternatives. This will likely lead to these tools tracking and reporting fewer ranking keywords past position 10, meaning that your charts in these tools will look artificially low starting September 10. It could also lead brands to place less emphasis on targeting specific keywords in the future, if traditional keyword tracking and monitoring sees significant cost increases that get passed along to businesses.
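The 10x figure is simple arithmetic, sketched below under the assumption that a standard results page returns 10 listings per request. The function name `requests_needed` is hypothetical, and real tools may batch or sample differently.

```python
import math


def requests_needed(depth: int, results_per_request: int) -> int:
    """Number of requests required to see the top `depth` results."""
    return math.ceil(depth / results_per_request)


# One request covered the top 100 when num=100 worked.
before = requests_needed(depth=100, results_per_request=100)

# Assumption: without num=100, each request returns 10 results.
after = requests_needed(depth=100, results_per_request=10)

print(before, after, after / before)
```

Under that assumption, tracking the same keyword to the same depth takes 10 requests where one used to suffice, and the multiplier applies to every tracked keyword.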

The Impact on Google Search Console Reporting

With keyword tracking tools using the 100-result view, desktop impressions in particular had been inflated by bots and web scrapers because most of them report results on desktop devices by default. The average ranking position was also skewed heavily toward worse (higher-numbered) positions.

For example, if you had a keyword ranked in position 90, a tracker scraping 100 results would have registered an impression in Google Search Console because the result was technically visible to the tool. With these tools and other web scrapers no longer viewing 100 results at one time, the ranking at position 90 is no longer visible to that scraper, so it no longer registers an impression.

So, as desktop impressions drop significantly, the average ranking position is improving significantly because lower-ranked results are no longer being displayed to scrapers and counted as impressions.
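A small worked example shows how both effects can fall out of the same change. Everything here is hypothetical: the keyword positions, the impression counts, and the simplifying assumptions that average position is an impression-weighted mean and that a scraper registers an impression for every result within the number of listings it loads per request.

```python
def search_console_view(rows, scraper_depth):
    """Return (total_impressions, average_position) for a scraper depth.

    rows: (position, human_impressions, scraper_fetches) tuples.
    A scraper fetch counts as an impression only when the result's
    position is within the number of results the scraper loads.
    """
    total_impressions = 0
    weighted_positions = 0
    for position, human_impressions, scraper_fetches in rows:
        impressions = human_impressions
        if position <= scraper_depth:
            impressions += scraper_fetches
        total_impressions += impressions
        weighted_positions += position * impressions
    return total_impressions, weighted_positions / total_impressions


# Hypothetical site: four keywords, each fetched 20 times by scrapers.
rows = [(3, 400, 20), (8, 150, 20), (45, 5, 20), (90, 0, 20)]

imps_before, pos_before = search_console_view(rows, scraper_depth=100)
imps_after, pos_after = search_console_view(rows, scraper_depth=10)

print(f"num=100 view: {imps_before} impressions, avg position {pos_before:.1f}")
print(f"10-result view: {imps_after} impressions, avg position {pos_after:.1f}")
# num=100 view: 635 impressions, avg position 8.7
# 10-result view: 595 impressions, avg position 4.8
```

In this toy data set the human impressions never change, yet total impressions fall and the reported average position improves by almost four places, simply because the scraper stops seeing positions 45 and 90.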

Search engine optimization (SEO) professionals who report on impression and average position data no longer have a comparable year-over-year data set to work with, and desktop data may need to be taken with a large grain of salt going forward.

Rethinking The Great Decoupling

For the past year or so, “The Great Decoupling,” or the pattern of rising impressions without clicks following suit, has been widely attributed to a continued increase in AI Overviews and additional advanced SERP features displaying in the Google search results.

But two things can be true simultaneously: bot traffic polluting Google Search Console impression data and AI Overviews siphoning off clicks.

While mobile clickthrough trends show that the Great Decoupling is still a valid explanation, AI Overviews may not have had as drastic an effect as the SEO community initially suspected. Part of the pattern may also have been due to an increase in bot traffic from ranking tools and from AI tools accessing data through SerpApi. We can’t expect clicks and impressions to return to pre-AI Overview era trends, but it will be very interesting to see whether impression trends approach their pre-decoupling trendlines.

What to Do Now

Audit Google Search Console data, comparing shifts in impressions and average ranking position trends before and after the week of September 10. If mobile impressions and overall clicks remain steady but desktop impressions decline, it could be the result of bots previously inflating the numbers. It’s also worth exporting as much historical data as possible from Google Search Console and whichever rank tracking tool you are using, so that you can monitor overall historical trends going forward.
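The comparison can be sketched in a few lines once the data is exported. The row shape below (date, device, impressions, clicks) is an assumed layout rather than the exact Search Console export format, the numbers are invented, and the year in the cutoff date is an assumption because only the day is given above.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

CUTOFF = date(2025, 9, 10)  # assumed year; adjust to match your data

# Hypothetical daily rows, one per date and device.
rows = [
    {"date": date(2025, 9, 8), "device": "DESKTOP", "impressions": 1000, "clicks": 50},
    {"date": date(2025, 9, 9), "device": "DESKTOP", "impressions": 1100, "clicks": 52},
    {"date": date(2025, 9, 10), "device": "DESKTOP", "impressions": 620, "clicks": 51},
    {"date": date(2025, 9, 11), "device": "DESKTOP", "impressions": 640, "clicks": 51},
    {"date": date(2025, 9, 8), "device": "MOBILE", "impressions": 800, "clicks": 40},
    {"date": date(2025, 9, 9), "device": "MOBILE", "impressions": 820, "clicks": 42},
    {"date": date(2025, 9, 10), "device": "MOBILE", "impressions": 805, "clicks": 41},
    {"date": date(2025, 9, 11), "device": "MOBILE", "impressions": 815, "clicks": 41},
]


def pct_change_by_device(rows, cutoff, metric):
    """Percent change in the mean daily metric, before vs. on/after cutoff."""
    before, after = defaultdict(list), defaultdict(list)
    for row in rows:
        bucket = before if row["date"] < cutoff else after
        bucket[row["device"]].append(row[metric])
    changes = {}
    for device, values in before.items():
        baseline = mean(values)
        if device in after and baseline > 0:
            changes[device] = (mean(after[device]) - baseline) / baseline * 100
    return changes


impression_change = pct_change_by_device(rows, CUTOFF, "impressions")
click_change = pct_change_by_device(rows, CUTOFF, "clicks")
for device in sorted(impression_change):
    print(device, round(impression_change[device], 1), round(click_change[device], 1))
```

A steep desktop impression drop alongside flat mobile impressions and flat clicks, as in this invented sample, is the pattern that would be consistent with the bot-inflation explanation. It does not prove it.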

Regardless, mark September 10 on your calendars as a date to remember as you report on performance, because it will stand out in your trendlines for the next 16 months.

When the picture that tracking tools paint fluctuates, SEO becomes more difficult. Our whole world is based on using the available data to make smart decisions about how to modify the sites we work with to achieve stronger organic search performance.

But the overarching reality of SEO is that we’ve always worked across a wide array of imperfect tools, cobbling together data that we need to caveat and make allowances for. This is just another example of that. We’ve grown accustomed to trusting Google Search Console data, but this is a reminder that even gold-standard sources of information can hide impurities.