AI gets a lot of credit right now for being fast, smart, and endlessly capable, especially when it comes to writing. Like many people in digital advertising, I’ve been hearing the same question lately: “Can’t AI just write the ads?” And honestly, with how confidently these tools present themselves, it’s totally fair to wonder.

A few weeks ago, I tested a new chatbot and shared it with our team. This sparked broader conversations about which tools people were using, which ones felt the quickest and most accurate, and whether any stood out in ways that actually made a difference in real PPC workflows. Because I have a background in quantitative sociology, I naturally approached these questions with structure rather than intuition, so I put together a content analysis and comparison to see how different chatbots actually perform when given the same task.

I wanted to keep this grounded, so I chose a real product where I’m part of the target audience and ran a side-by-side comparison of five commonly used chatbots:

- Google Gemini in Google Sheets

- Google Gemini 2.5 Flash

- OpenAI’s ChatGPT 5

- Google’s NotebookLM

- Perplexity’s Comet Browser Assistant

The product in question was a delightfully niche rat-toy subscription box. As the proud owner of two pet rats (often called “fancy rats”), I knew I’d be able to judge which chatbot outputs would resonate with me and my fellow rat owners.

Prompt Structure & Evaluation Criteria

In any study, consistency matters, so I wrote one standardized prompt and ran it through all five models without any follow-up instructions. That way, the results would show each chatbot’s raw starting point. The goal wasn’t to crown a winner, but to understand the nuances of how each one functions and where human judgment is still essential.

Because the product is so niche, the task tested each chatbot’s ability to capture tone, specificity, and audience understanding within a strict set of parameters. I asked each model to generate 20 short headlines, 10 long headlines, and 10 descriptions. The prompt asked for these text assets to be “within Google’s character limits” without spelling the limits out, which tested both how each model handled a vague instruction and whether it already knew the limits. I included a clear objective of driving sales and requested ad copy tailored to adults aged 22 to 60 who own and love pet rats.

Each AI model’s raw output was evaluated across five metrics:

- Speed and workflow (is the output ready to use?)

- Output formatting (is the output clean & error-free?)

- Content quality and creativity (is the output eye-catching & unique?)

- Prompt accuracy and relevance (did the output follow instructions?)

- Strategic depth and audience resonance (is the output persuasive to rat owners?)

These criteria reflect the practical considerations that determine whether AI-generated copy is usable as is or only serves as a starting point that needs significant human intervention.
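Two of these criteria, output formatting and prompt accuracy, largely come down to character counts, which are easy to check mechanically. Here is a minimal, hypothetical sketch of such a check. It is not part of this comparison’s method. It assumes Google’s limits of 30 characters for short headlines and 90 for long headlines and descriptions (confirm these against Google Ads’ current documentation for your campaign type), and the `AssetCheck` class, `report` function, asset labels, and sample headlines are all made up for illustration.

```python
from dataclasses import dataclass

# ASSUMED character limits per asset type; verify against Google's current docs.
LIMITS = {"short_headline": 30, "long_headline": 90, "description": 90}


@dataclass
class AssetCheck:
    kind: str  # one of the hypothetical keys in LIMITS
    text: str

    @property
    def limit(self) -> int:
        return LIMITS[self.kind]

    @property
    def length(self) -> int:
        # Simplification: counts Unicode code points. Google may count some
        # characters (e.g. double-width scripts) differently.
        return len(self.text)

    @property
    def within_limit(self) -> bool:
        return self.length <= self.limit

    @property
    def utilization(self) -> float:
        # Share of the allowed space actually used (1.0 = exactly at the limit).
        return self.length / self.limit


def report(assets: list[AssetCheck]) -> dict:
    """Count assets over their limit and average how full they are."""
    if not assets:
        return {"over_limit": 0, "avg_utilization": 0.0}
    over = sum(not a.within_limit for a in assets)
    avg_fill = sum(a.utilization for a in assets) / len(assets)
    return {"over_limit": over, "avg_utilization": round(avg_fill, 2)}


if __name__ == "__main__":
    # Hypothetical sample assets, not outputs from the study.
    sample = [
        AssetCheck("short_headline", "Fun Toys For Your Fancy Rats"),          # 28 chars
        AssetCheck("short_headline", "Monthly Enrichment Toys For Pet Rats"),  # 36 chars
    ]
    print(report(sample))  # prints {'over_limit': 1, 'avg_utilization': 1.07}
```

Utilization is included alongside the pass/fail check because staying under a limit is only half the story. Against the assumed 90-character limit, a 60- to 70-character long headline scores roughly 0.67 to 0.78, which flags unused space that could carry more persuasive detail.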

What the Study Revealed

One important caveat: this study is a snapshot in time. It reflects the AI landscape when I ran it in late October 2025. These tools change constantly, and running the same test today, next week, or a month from now could yield very different results.

One of the clearest findings was that no single model led across all metrics. Each chatbot showed clear strengths in some categories and missed the mark in others. The results pointed to a more nuanced reality: different AI tools excel on different metrics, and choosing the right one depends heavily on the task at hand.

Model-by-Model Breakdown

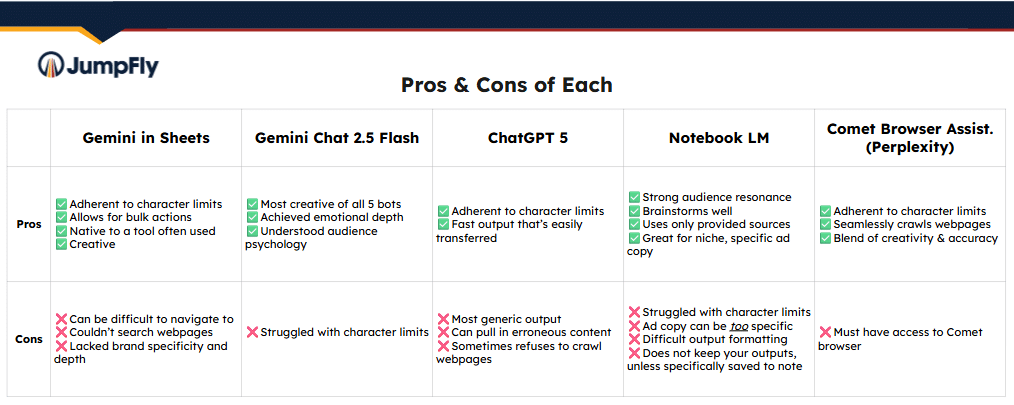

Gemini in Google Sheets

Gemini’s spreadsheet integration stood out for its efficiency and discipline. It delivered fast output, highly consistent formatting, and strong adherence to character limits. For specialists who need clean, rule-following copy quickly, this model performed well. However, its creativity and emotional resonance lagged behind the other models. Its outputs read clearly and correctly but lacked depth. And because Gemini in Google Sheets can’t yet crawl webpages, its copy lacked brand-specific detail.

Gemini 2.5 Flash

Where Gemini in Sheets excelled in speed and discipline, Gemini 2.5 Flash excelled in creativity. This model produced some of the most playful, engaging, and audience-attuned copy in the study, showing a stronger sense of what might excite or delight this niche pet audience. The tradeoff is that this creativity often came at the expense of formatting consistency: its text assets frequently exceeded their character limits. Gemini 2.5 Flash clearly has value, especially for ideation, but its output usually needs a specialist to refine and tighten it, and all that editing time can add up quickly!

ChatGPT 5

In this study, ChatGPT 5 generated the safest, most generic copy overall. It followed the prompt and rarely broke character limits, which made it a dependable, if basic, baseline. However, its phrasing often defaulted to broad, repetitive language that didn’t differentiate the product or speak specifically to the intended audience. It also used the available space inefficiently, often producing long headlines and descriptions of only 60 to 70 characters when Google allows up to 90. While the copy was technically sound, it lacked the originality and strategic nuance needed to compete effectively on the SERP. ChatGPT also occasionally added extraneous or incorrect information; for example, it wrote ad copy promising “20% off!” when there was no mention of a sale anywhere on the site. The time specialists spend verifying this chatbot’s output can eat up all the time saved by using it in the first place.

NotebookLM

Many people use NotebookLM as an AI study buddy, but as an ad copywriter it showed moments of creativity and thematic variation. Because it relies solely on the source material you give it, it can be helpful during early brainstorming. However, it struggled significantly with character limits and consistency, making it less suited to direct use in the tightly structured format of Google Ads. Its strengths leaned toward conceptual exploration rather than ready-to-deploy text assets, which makes it a great tool for niche ads where you want hyper-specific output.

Comet Browser Assistant

Of all the chatbots tested, Comet was the most balanced performer across all five evaluation categories. It didn’t take the top spot in creativity or strategic depth, but it avoided major weaknesses and delivered dependable, well-structured output. Its copy blended clarity, accuracy, and audience relevance more evenly than the others, making it the steadiest “all-around” option in the study.

What This Means for Advertisers

This comparison ultimately showed that AI can move quickly, generate volume, and spark ideas, but it doesn’t yet understand the strategic nuances that drive performance. None of the models consistently balanced creativity, accuracy, formatting discipline, and audience insight in a way that could replace human decision-making.

This is precisely how we approach AI in paid search advertising. We see these tools as accelerators, not autopilots. They help our specialists explore ideas faster and work more efficiently, but the final decisions still come from people who understand the brand, the audience, and the platform dynamics. AI provides momentum, but the direction still comes from experience.

The goal of this study wasn’t to find the perfect chatbot. It was to understand how these tools behave on real PPC work and which ones suit which tasks. The results were clear: every model contributed something valuable, but each also required interpretation, refinement, and direction. That’s the space where human expertise remains irreplaceable.

AI can generate a starting point, but it can’t yet determine what will resonate with a specific audience or recognize when a headline is technically correct but strategically empty. Those choices still rely on people.

It’s not about choosing between human creativity and AI. We’re choosing the best of both: AI for speed and exploration, and humans for insight and strategy. When paired thoughtfully, the two complement each other, and the work is stronger for it.